Quantum computing startup IonQ today announced its road map for the next few years — following a similar move from IBM in September — and it’s quite ambitious, to say the least.

At our Disrupt event earlier this year, IonQ CEO and president Peter Chapman suggested that we were only five years away from having desktop quantum computers. That’s not something you’ll likely hear from the company’s competitors — who also often use a very different kind of quantum technology — but IonQ now says that it will be able to sell modular, rack-mounted quantum computers for the data center in 2023 and that by 2025, its systems will be powerful enough to achieve broad quantum advantage across a wide variety of use cases.

In an interview ahead of today’s announcement, Chapman showed me a prototype of the hardware the company is working on for 2021, which fits on a workbench. The actual quantum chip is currently the size of a half-dollar, and the company is now working on putting the core of its technology, including all of the optics that make its system work, on a single chip.

“That’s the goal,” he said about the chip. “As soon as you get to 2023, then you get to go to scale in a different way, which is, I just tell somebody in Taiwan to start and give me 10,000 of those things. And you get to scale through manufacturing, as well. There’s nothing quantum in any of the things that in any of the hardware we’re doing,” he said, though IonQ co-founder and chief scientist Chris Monroe quickly jumped in and noted that that’s true, “except for the atoms.”

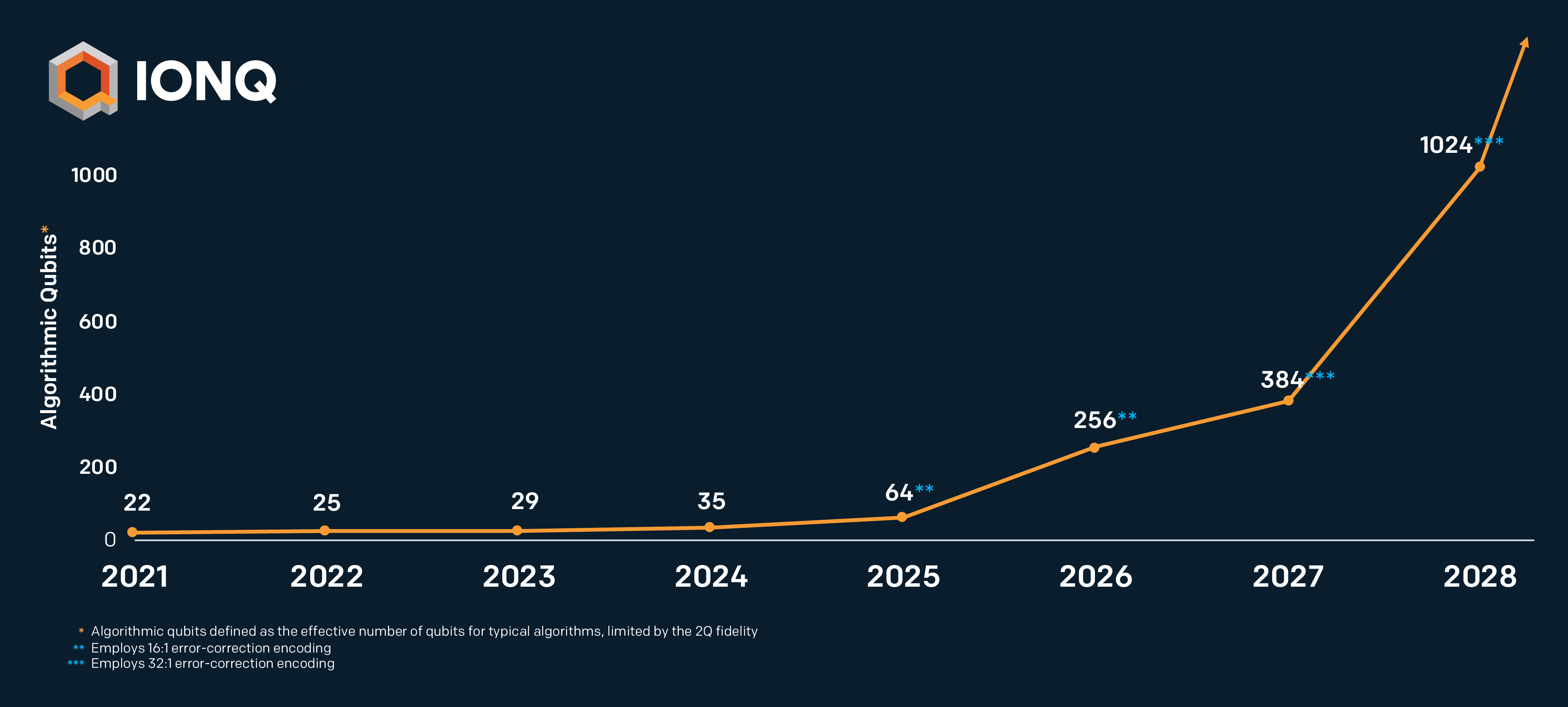

And that’s an important point, because thanks to betting on trapped ion quantum computing as the core technology for its machines, IonQ doesn’t have to contend with the low temperatures that IBM and others need to run their machines. Some sceptics have argued that IonQ’s technology will be hard to scale, but that’s something Chapman and Monroe easily dismiss, and IonQ’s new road map points at systems with thousands of algorithmic qubits (which are made out of a factor of 10 or 20 more physical qubits for handling error correction) by 2028.

“As soon as you hit about 40 qubits — algorithmic qubits — in the beginning of 2024, then you’ll start to see quantum advantage probably in machine learning,” Chapman explained. “And then, I think it’s pretty well accepted that at 72 qubits is roughly when you start to do quantum advantage fairly broadly. So that would be in 2025. When you start to get into 2027, you’re now getting into hundreds of qubits if not early 1000s of qubits in [2028]. And now you’re starting to get into full-scale fault tolerance.”

We’ll see slow growth in the number of additional algorithmic qubits — which is what IonQ calls a qubit that can be used in running a quantum algorithm. Others in the industry tend to talk about “logical qubits,” but IonQ’s definition is slightly different.

Talking about how to compare different quantum systems, Chapman noted that “fidelity is not good enough.” It doesn’t matter if you have 72 or 72 million qubits, he said, if only three of those are usable. “When you see a road map that says, ‘I’m going to have umpteen 1000 qubits,’ it’s kind of like, ‘I don’t care, right?’ On our side, since we’re using individual atoms, I could show you a little vial of gas and say, ‘look, I’ve got a trillion qubits, all ready to do computation!’ But they’re not particularly useful. So what we tried to do in the road map was to talk about useful qubits.”

He also argued that quantum volume, a measurement championed by IBM and others in the quantum ecosystem, isn’t particularly useful because at some point, the numbers just get far too high. In essence, though, IonQ is still using quantum volume: it defines its algorithmic qubits as the base-2 logarithm of the quantum volume of a given system.

Once IonQ is able to get to 32 of these algorithmic qubits — its current systems have 22 — it’ll be able to achieve a quantum volume of 4.2 billion instead of the 4 million it claims for its current system.
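The arithmetic behind those figures is easy to check. Here is a minimal Python sketch, assuming that algorithmic qubits are the base-2 logarithm of quantum volume (the relationship the 22-qubit and 32-qubit numbers imply). The function names are made up for illustration and have nothing to do with IonQ's own calculator.

```python
import math


def quantum_volume_from_aq(aq: int) -> int:
    """Quantum volume implied by a number of algorithmic qubits (assumed QV = 2**AQ)."""
    return 2 ** aq


def aq_from_quantum_volume(qv: int) -> float:
    """Algorithmic qubits implied by a quantum volume (assumed AQ = log2(QV))."""
    return math.log2(qv)


# The two system sizes mentioned in the article.
for aq in (22, 32):
    print(f"{aq} algorithmic qubits -> quantum volume {quantum_volume_from_aq(aq):,}")
```

Under that assumption, 22 algorithmic qubits work out to 4,194,304 and 32 to 4,294,967,296, which lines up with the rounded "4 million" and "4.2 billion" figures the company cites.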

As Monroe noted, the company’s definition of algorithmic qubits also takes variable error correction into account. Error correction remains a major research area in quantum computing, but for the time being, IonQ argues that its ability to keep gate fidelity high means it doesn’t yet have to worry about it. The company also says it has already demonstrated the first fault-tolerant error-corrected operations with an overhead of 13:1.

“Because our native errors are so low, we don’t need to do error correction today, with these 22 algorithmic qubits. But to get that [99.99%] of fidelity, we’re going to leak in a little bit of error correction — and we can sort of do it on the fly. It’s almost like a little bit of an adjustment. How much error correction do you want? It’s not all or nothing,” Monroe explained.

IonQ isn’t afraid to say that it believes that “other technologies, because of their poor gate fidelity and qubit connectivity, might need 1,000, 10,000 or even 1,000,000 qubits to create a single error-corrected qubit.”

To put all of this into practice, IonQ today launched an Algorithmic Qubit Calculator that it argues will make it easier to compare systems.

For the near future, IonQ expects to use a 16:1 overhead for error correction — that is, it expects to use 16 physical qubits to create a high-fidelity algorithmic qubit. Once it hits about 1,000 logical qubits, it expects to use a 32:1 overhead. “As you add qubits, you need to increase your fidelity,” Chapman explained, and so for its 1,000-qubit machine in 2028, IonQ will need to control 32,000 physical qubits.
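The overhead math here is simple multiplication, and it can be sketched in a few lines of Python. This assumes a flat ratio of physical qubits per error-corrected qubit, as the article describes; the function name is hypothetical, and a real error-correction scheme would involve far more than a single multiplier.

```python
def physical_qubits_needed(error_corrected_qubits: int, overhead: int) -> int:
    """Physical qubits required at a flat overhead ratio (assumed overhead:1)."""
    return error_corrected_qubits * overhead


# The 2028 target from the road map: 1,000 qubits at a 32:1 overhead.
print(f"{physical_qubits_needed(1000, 32):,}")

# For comparison only: the same machine at the near-term 16:1 ratio.
print(f"{physical_qubits_needed(1000, 16):,}")
```

At 32:1, the 1,000-qubit machine comes out to the 32,000 physical qubits Chapman mentions; at the near-term 16:1 ratio, the same count would need half that.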

IonQ has long argued that scaling its technology doesn’t necessitate any technological breakthroughs. And indeed, the company argues that by packing a lot of its technology on a single chip, its system will become more stable by default (noise, after all, is the archenemy of qubits), in part because the laser beams won’t have to travel very far.

Chapman, who has never been afraid to push for a bit of publicity, even noted that the company wants to fly one of its quantum computers in a small plane one of these days to show how stable it is. It’s worth noting, though, that IonQ is far more bullish about scaling up its systems in the short term than any of its competitors. Monroe acknowledged as much, but argued that it’s basic physics at this point.

“Especially in the solid-state platforms, they’re doing wonderful physics,” Monroe said. “They’re making a little bit of progress every year, but a roadmap in 10 years, based on a solid-state qubit, relies on breakthroughs in material science. And maybe they’ll get there, it’s not clear. But, you know, the physics of the atom is all sewn up and we’re very confident on the engineering path forward because it’s engineering based on proven protocols and proven devices.”

“We don’t have a manufacturing problem. You want a million qubits? No problem. That’s easy,” Chapman quipped.

source https://techcrunch.com/2020/12/09/ionq-plans-to-launch-a-rack-mounted-quantum-computer-for-data-centers-in-2023/
