TikTok this morning announced a new set of Community Guidelines that aim to strengthen its existing policies in areas like harassment, dangerous acts, self-harm and violence, alongside the introduction of four new features similarly focused on the community’s well-being. These include updated resources for those struggling with self-harm or suicide, opt-in viewing screens that hide distressing content, a text-to-voice feature to make TikTok more accessible and an expanded set of COVID-19-related resources.

While many of the topics were already covered by TikTok’s Community Guidelines ahead of today’s changes, the company said the updates add more specifics to each of the areas based on what behavior it’s seen on the platform, heard through community feedback and received via input from experts such as academics, civil society organizations and TikTok’s own Content Advisory Council.

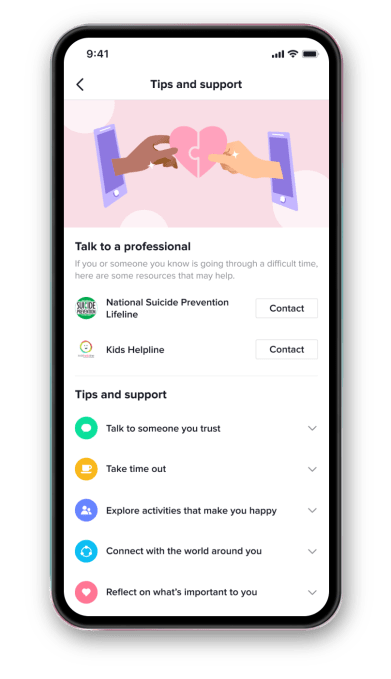

One update is to the guidelines related to suicide and self-harm, which now incorporate feedback and language used by mental health experts to avoid normalizing self-injury behaviors. Specifically, TikTok’s policy on eating disorder content has added considerations aimed at prohibiting the normalization or glorification of dangerous weight loss behaviors.

Image Credits: TikTok

Stronger policies on bullying and harassment now further detail the types of content and behaviors that aren’t welcome on TikTok, including doxxing, cyberstalking and a more extensive policy on sexual harassment. This one is particularly interesting, given that there have been some cases of TikTok users figuring out where anti-masker nurses worked — and at least one incident led to the nurse being put on leave. It’s unclear how TikTok will approach this sort of “doxxing” behavior, however, as it didn’t involve publishing a home address — only alerting an employer.

Another update expanded the guidelines around TikTok’s dangerous acts policy to more explicitly limit, label or remove content depicting dangerous acts and challenges. Through a new “harmful activities” section to the minor safety policy, TikTok reiterates that content promoting dangerous dares, games and other acts that may jeopardize the safety of youth is prohibited.

TikTok also updated its policy around dangerous individuals and organizations to focus on the issue of violent extremism. The new guidelines now describe in greater detail what’s considered a threat or incitement to violence, as well as the content that will be prohibited. This one is timely, too, as many Trump supporters have been pushing for a new civil war or other violence as a result of Trump losing the U.S. presidential election.

In terms of new features, TikTok worked with behavioral psychologists and suicide prevention experts — including Providence, Samarita

TikTok will also introduce new opt-in viewing screens that will appear on top of videos of content some may find graphic or distressing. These videos are already ineligible for the For You feed, but may not be prohibited. For example, the screens might cover violence or fighting that’s not being removed due to documentary reasons; animals in nature doing something some find upsetting — like hunting and killing their prey; or otherwise scary stuff, like horror clips.

When TikTok becomes aware of this disturbing content through user flagging, it will apply the screens to the videos to reduce unwanted viewing. Users who then come across the video can either tap the button at the bottom of the screen to “skip video” or the other to “watch anyway.”

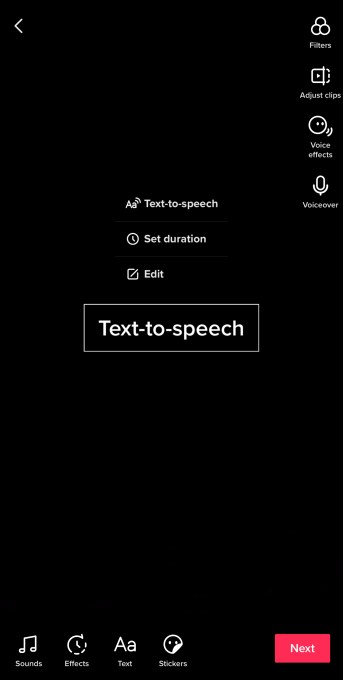

In addition, a new text-to-voice feature, aimed at accessibility, lets people convert typed text into a voice that reads it aloud over the video. This follows TikTok’s recent launch of a feature to support people with photosensitive epilepsy.

Image Credits: TikTok

TikTok is also adding questions and answers about COVID-19 vaccines to its in-app coronavirus resource hub. These will be provided by public health experts, such as the Centers for Disease Control and Prevention (CDC), and will be linked to from the Discover page, search results and banners on COVID-19 and vaccine-related videos. The company says its COVID-19 hub has been viewed more than 2 billion times in the last six months. TikTok is also partnering with Team Halo so that scientists around the world can share the progress being made on the vaccine through video updates.

TikTok has been fairly aggressive about moderating content on its platform. If you scroll through the feed long enough, you’ll find users lamenting videos of theirs that were taken down for policy violations. You’ll also come across videos where users have reuploaded another person’s deleted video in order to respond to it. TikTok also moved quickly to address much of the election misinformation that was being spread in November by blocking top hashtags, like #RiggedElection and #SharpieGate.

The new policies out today also include changes that address more recent user behavior, like the calls for violence following the election.

“Keeping our community safe is a commitment with no finish line,” said TikTok in its announcement today about the updates. “We recognize the responsibility we have to our users to be nimble in our detection and response when new kinds of content and behaviors emerge. To that end, we’ll keep advancing our policies, developing technology to automatically detect violative content, building features that help people manage their TikTok presence and content choices, and empowering our community to help us foster a trustworthy environment. Ultimately, we hope these updates enable people to have a positive and meaningful TikTok experience.”

source https://techcrunch.com/2020/12/15/tiktok-expands-community-guidelines-rolls-out-new-well-being-focused-features/
