Facebook has quietly published internal research that was earlier obtained by the Wall Street Journal — and reported as evidence the tech giant knew about Instagram’s toxic impact on teenaged girls’ mental health.

The two slide decks can be found here and here.

The tech giant also said it provided the material to Congress earlier today.

However, Facebook hasn’t simply released the slides: it has added its own running commentary, which seeks to downplay the significance of the internal research following days of press commentary couching the Instagram teen girls’ mental health revelations as Facebook’s ‘Big Tobacco’ moment.

Last week the WSJ reported on internal documents its journalists had obtained, including slides from a presentation in which Facebook appeared to acknowledge that the service makes body image issues worse for one in three teen girls.

The tech giant’s crisis PR machine swung into action — with a rebuttal blog post published on Sunday.

In a further step, the tech giant has now put two internal research slide decks online, which appear to form at least a part of the WSJ’s source material. The reason it has taken the company days to publish this material appears to be that its crisis PR team was busy figuring out how best to reframe the contents.

The material has been published with some light redactions (removing the names of the researchers involved, for example) — but also with extensive ‘annotations’ in which Facebook can be seen attempting to reframe the significance of the research, saying it was part of wider, ongoing work to “ensure that our platform is having the most positive impact possible”.

It also tries to downplay the significance of specific negative observations, suggesting, for example, that the sample size of teens who had reported problems was very small.

“The methodology is not fit to provide statistical estimates for the correlation between Instagram and mental health or to evaluate causal claims between social media and health/well-being,” Facebook writes in an introduction annotation on one of the slide decks. Aka ‘nothing to see here’.

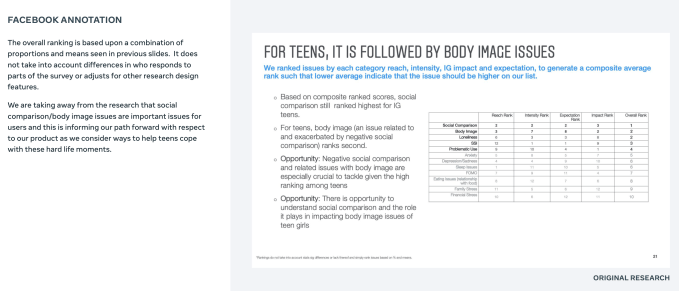

Later on, commenting on a slide entitled “mental health findings” (which is subtitled: “Deep dive into the Reach, Intensity, IG Impact, Expectation, Self Reported Usage and Support of mental health issues. Overall analysis and analysis split by age when relevant”), Facebook writes categorically that: “Nothing in this report is intended to reflect a clinical definition of mental health, a diagnosis of a mental health condition, or a grounding in academic and scientific literature.”

While on a slide that contains the striking observation that “Most wished Instagram had given them better control over what they saw”, Facebook nitpicks that the colors used by its researchers to shade the cells of the table which presents the data might have created a misleading interpretation — “because the different color shading represents very small difference within each row”.

If the sight of Facebook publicly questioning the significance of internal work and quibbling with some of the decisions made by its own researchers seems unprepossessing, remember that the stakes of this particular crisis for the adtech giant are very high.

The WSJ’s reporting has already derailed a planned launch of a ‘tweens’ version of the photo sharing app.

US lawmakers are also demanding answers.

More broadly, there are global moves to put child protection at the center of digital regulations, such as the UK’s forthcoming Online Safety Act (while its Age Appropriate Design Code is already in force).

So there are — potentially — very serious ramifications for how Instagram will be able to operate in the future, certainly vis-a-vis children and teenagers, as regulations get drafted and passed.

Facebook’s plan to launch a version of Instagram for under-13s emerged earlier this year, also via investigative reporting, with BuzzFeed obtaining an internal memo which described “youth work” as a priority for Instagram.

But on Monday Instagram head Adam Mosseri said the company was “pausing” ‘Instagram kids’ to take more time to listen to the countless child safety experts screaming at it to stop in the name of all that is good and right (we paraphrase).

Whether the social media behemoth will voluntarily make that “pause” permanent looks doubtful — given how much effort it’s expending to try to reframe the significance of its own research.

Though regulators may ultimately step in and impose child safety guardrails.

“Contrary to how the objectives have been framed, this research was designed to understand user perceptions and not to provide measures of prevalence, statistical estimates for the correlation between Instagram and mental health or to evaluate causal claims between Instagram and health/well-being,” Facebook writes in another reframing notation, before going on to “clarify” that the 30% figure (relating to teenaged girls who felt its platform made their body image issues worse) “only” applied to the “subset of survey takers who first reported experiencing an issue in the past 30 days and not all users or all teen girls”.

So, basically, Facebook wants you to know that Instagram “only” makes mental health problems worse for fewer teenage girls than you might have thought.

(In another annotation it goes on to claim that “fewer than 150 teen girls spread across… six countries answered questions about their experience of body image and Instagram”. As if to say, that’s totally okay then.)

The tech giant’s wider spin with the annotated slides is an attempt to imply that its research work shows proactive ‘customer care’ in action — as it claims the research is part of conscious efforts to explore problems experienced by Instagram users so that it can “develop products and experience for support”, as it puts it.

Yeah we lol’d too.

After all, this is the company that was previously caught running experiments on unwitting users to see if it could manipulate their emotions.

In that case Facebook succeeded in nudging a bunch of users, to whom it showed more negative news feeds, to post more negative things themselves. Oh and that was back in 2014! So you could say emotional manipulation is in Facebook’s DNA…

But fast forward to 2021 and Facebook wants you, the public, and concerned parents everywhere, as well as US and global lawmakers who are now sharpening their pens to apply controls to social media, not to worry about teenagers’ mental health, because it can figure out how best to push their buttons to make them feel better, or something.

Turns out, when you’re in the ad sales business, everything your product does is an A/B test against some poor unwitting ‘user’…

Screengrab from one of Facebook’s annotated slide decks released in response to the WSJ’s reporting about teen Instagram users’ mental health issues (Screengrab: Natasha Lomas/TechCrunch.)

source https://techcrunch.com/2021/09/30/seeking-to-respin-instagrams-toxicity-for-teens-facebook-publishes-annotated-slide-decks/
